Deepfake: A Study on Knowledge of Media Practitioners in Cotabato Province, Philippines

Abstract

Purpose of the study: Deepfake technology uses artificial intelligence algorithms to convincingly manipulate images, videos, and audio, replacing an individual's likeness with that of another. The primary objective of the study is to assess how much media practitioners in Cotabato Province know about deepfakes. Additionally, it examines the relationship between the practitioners’ socio-demographic characteristics, such as their years of experience in the media field and the undergraduate academic program taken, and their level of knowledge of deepfakes.

Methodology: The study employed a descriptive-correlational research design to examine the relationship between selected variables. A cluster random sampling technique was used to determine the sample. The second district of Cotabato Province comprises 15 radio stations, from which 9 stations were randomly selected as representative clusters. A total of 25 media practitioners participated in the study, including news writers, DJs, reporters, and broadcasters. Technicians were intentionally excluded from the sample, as the focus was on individuals directly involved in the production and dissemination of news and information.
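The cluster selection step described above can be illustrated with a minimal sketch. It assumes the 9 stations were drawn uniformly at random without replacement from the 15. The station labels, the `select_clusters` helper, and the seed are hypothetical and are not taken from the study, which does not report how the draw was carried out.

```python
import random

# Hypothetical labels: the study does not name its 15 radio stations.
stations = [f"Station {i:02d}" for i in range(1, 16)]


def select_clusters(clusters, k, seed=None):
    """Draw k clusters uniformly at random, without replacement."""
    rng = random.Random(seed)  # a seed (assumed here) makes the draw repeatable
    return sorted(rng.sample(clusters, k))


# 9 of the 15 stations serve as the sampled clusters.
selected = select_clusters(stations, k=9, seed=2025)
print(selected)
```

Fixing the seed is a design choice for reproducibility only; any uniform draw of 9 distinct stations would be an equally valid instance of the technique.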

Main Findings: Findings revealed that media practitioners demonstrated good knowledge of deepfake content, its creation, and the software commonly used for generating deepfakes. However, their knowledge was limited when it came to deepfake detection and the software tools available for identifying such manipulated content. Furthermore, a significant relationship was found between knowledge of deepfake content and the years of experience as a media practitioner. In contrast, no significant correlation was observed between years of experience and knowledge of deepfake creation, detection, or the corresponding software used for either process.

Novelty/Originality of this study: Years of experience in media practice correlate positively with deepfake content knowledge, but not with knowledge of detection or creation tools, suggesting that experiential exposure does not necessarily equate to technical proficiency.


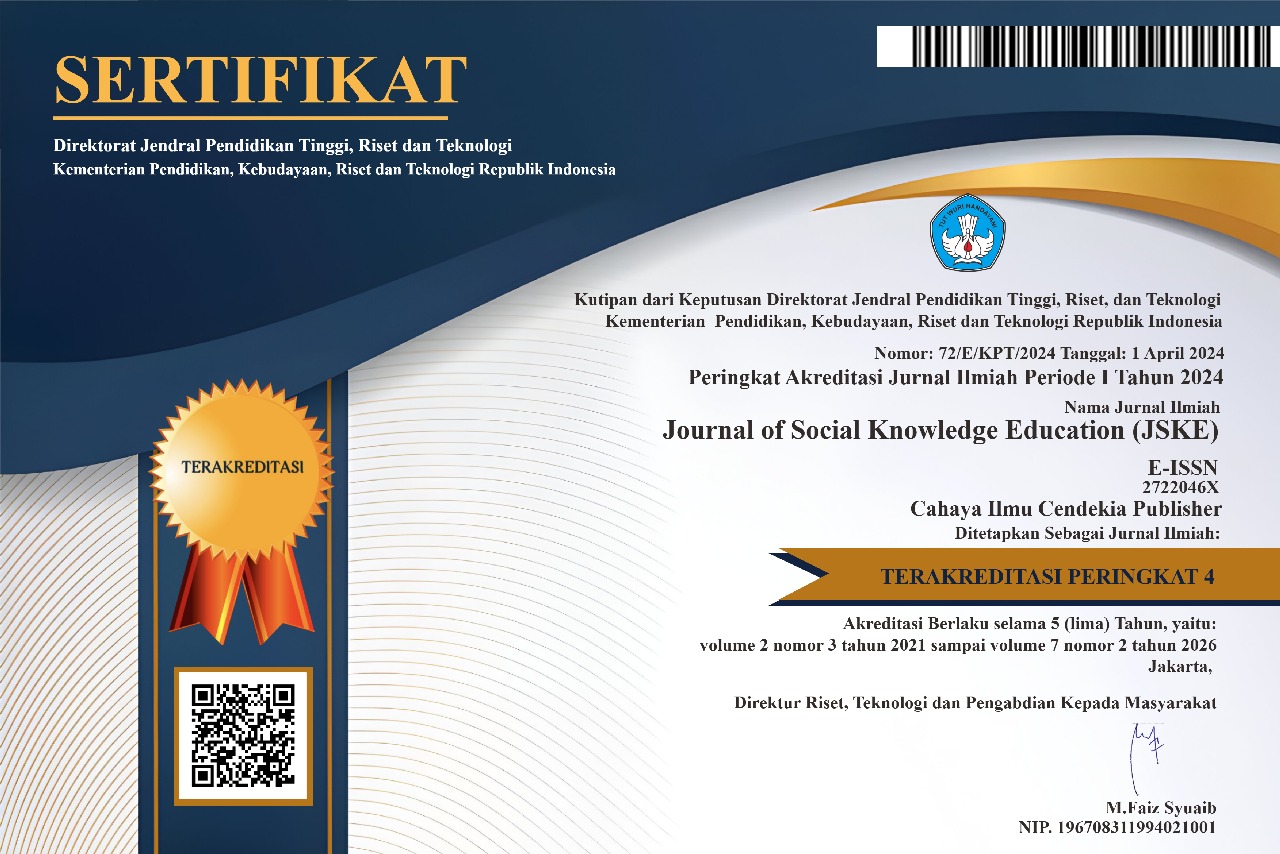

Copyright (c) 2025 Aravila Demon, Vilma Santos

This work is licensed under a Creative Commons Attribution 4.0 International License.

Authors who publish with this journal agree to the following terms:

- Authors retain copyright and acknowledge that the Journal of Social Knowledge Education (JSKE) is the first publisher licensed under a Creative Commons Attribution 4.0 International License.

- Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the journal's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this journal.

- Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges and earlier and greater citation of published work.
